Calibration accuracies

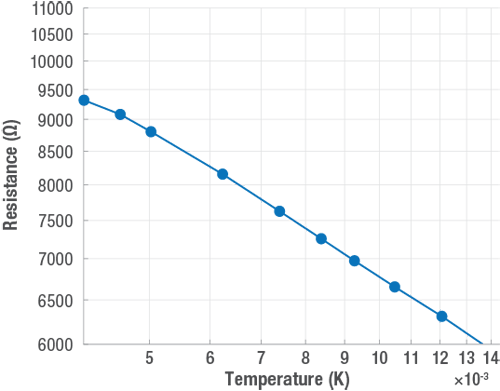

ULT Rox™ sensors undergo an intensive cryogenic calibration process. Each sensor comes with a downloadable calibration curve and traceability to NIST standards. Figure 1 shows an example of the roll-over behavior of a calibrated ULT Rox sensor. Calibration confirms that the roll-over point lies beyond the calibration limit, so the sensor provides valid readings down to 5 mK. To meet the specified accuracies at 5 mK, follow the installation instructions so that the calibration environment is replicated, and wait until the sensor has fully cooled down.
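The sketch below illustrates one way a downloaded calibration curve might be used to convert a resistance reading to temperature, and why roll-over matters: once R(T) stops falling monotonically with rising temperature, one resistance value maps to more than one temperature. It assumes the curve is available as a list of (temperature, resistance) pairs and uses simple log-log linear interpolation. Both the data format and the interpolation method are assumptions, and the calibration points are hypothetical placeholders, not real ULT Rox data.

```python
import math
from bisect import bisect_left

# HYPOTHETICAL calibration points as (temperature in K, resistance in ohms).
# Illustrative placeholders only, NOT real ULT Rox data: load the values from
# your sensor's own downloadable calibration curve instead.
CAL_POINTS = [
    (0.005, 10000.0),
    (0.010, 8000.0),
    (0.050, 5000.0),
    (0.500, 3000.0),
    (10.0, 2000.0),
]


def is_monotonic(points):
    """True if resistance strictly falls as temperature rises.

    A data set that includes the roll-over region fails this check, because
    one resistance value would then map to more than one temperature.
    """
    pts = sorted(points)  # sort by temperature
    return all(r1 < r0 for (_, r0), (_, r1) in zip(pts, pts[1:]))


def resistance_to_temperature(r_ohm, points=CAL_POINTS):
    """Convert a resistance reading to temperature by log-log interpolation."""
    if not is_monotonic(points):
        raise ValueError("calibration data is not monotonic (roll-over?)")
    by_r = sorted(points, key=lambda p: p[1])  # ascending resistance
    rs = [r for _, r in by_r]
    if not rs[0] <= r_ohm <= rs[-1]:
        raise ValueError("resistance outside the calibrated range")
    i = bisect_left(rs, r_ohm)
    if rs[i] == r_ohm:
        return by_r[i][0]
    # Interpolate linearly in log(T) versus log(R) between the two neighbours.
    (t_a, r_a), (t_b, r_b) = by_r[i - 1], by_r[i]
    frac = math.log(r_ohm / r_a) / math.log(r_b / r_a)
    return math.exp(math.log(t_a) + frac * math.log(t_b / t_a))


print(f"{resistance_to_temperature(4000.0) * 1e3:.1f} mK")
```

Readings outside the calibrated resistance range are rejected rather than extrapolated, which mirrors the advice to stay within the calibration limit. A supplier-recommended fitting method may be preferable to this interpolation.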

| Temperature | Accuracy |
|---|---|
| 5 mK¹ | ±1.2 mK |
| 7 mK¹ | ±0.8 mK |
| 10 mK¹ | ±1 mK |
| 20 mK¹ | ±2 mK |
| 50 mK¹ | ±4 mK |
| 1.4 K² | ±16 mK |
| 4.2 K² | ±16 mK |
| 10 K² | ±30 mK |

¹ Calibration performed using a noise thermometer.

² Accuracy given as $\sqrt{(\text{calibration uncertainty})^2 + (\text{reproducibility})^2}$
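Footnote 2 combines calibration uncertainty and reproducibility as a root sum of squares. A minimal sketch of that arithmetic, together with the tabulated accuracies, is shown below. The helper names are hypothetical, and the two component values in the example are invented purely to illustrate the calculation; the datasheet does not state the individual components.

```python
import math

# Accuracy specifications from the calibration table (temperature K -> accuracy K).
ACCURACY_SPEC_K = {
    0.005: 1.2e-3,
    0.007: 0.8e-3,
    0.010: 1.0e-3,
    0.020: 2.0e-3,
    0.050: 4.0e-3,
    1.4: 16e-3,
    4.2: 16e-3,
    10.0: 30e-3,
}


def combined_accuracy(calibration_uncertainty, reproducibility):
    """Root-sum-square combination from footnote 2 (inputs and output share units)."""
    return math.hypot(calibration_uncertainty, reproducibility)


def within_spec(temperature_k, error_k):
    """True if |error| is within the tabulated accuracy.

    Only the listed temperatures are supported; other values raise KeyError.
    """
    return abs(error_k) <= ACCURACY_SPEC_K[temperature_k]


# HYPOTHETICAL components in mK, chosen only to illustrate the arithmetic.
print(f"{combined_accuracy(12.0, 10.0):.1f} mK")  # prints "15.6 mK"
```

Because the terms add in quadrature, the larger component dominates: doubling the smaller term changes the total far less than doubling the larger one.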

Figure 1 Example of the roll-over curve of a single ULT Rox sensor

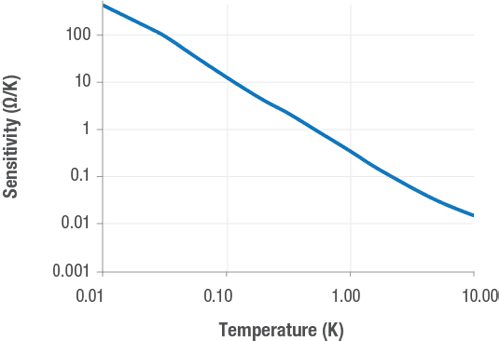

Temperature sensitivity

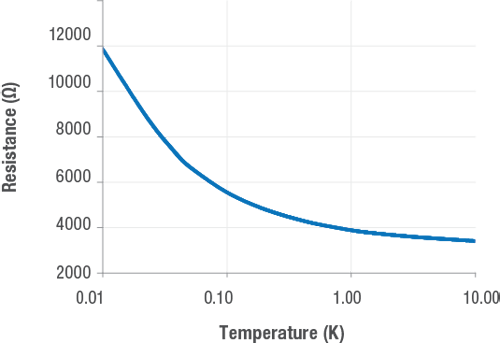

At 5 mK, ULT Rox devices typically exhibit a sensitivity of approximately 300,000 Ω/K, with a resistance of around 10 kΩ. Because of this high sensitivity, even small temperature fluctuations produce large changes in resistance, which makes the device well suited to precise measurements in ultra-low-temperature environments.
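To make the sensitivity figure concrete, the sketch below works through the arithmetic using only the typical values quoted above (300,000 Ω/K and 10 kΩ at 5 mK). It uses a linear approximation that holds only for small temperature steps. The 10 µK step and the 1 Ω resistance resolution are hypothetical example values, not specifications of the sensor or of any readout instrument.

```python
# Typical values quoted in the text for a ULT Rox sensor at 5 mK.
TEMPERATURE_K = 0.005
SENSITIVITY_OHM_PER_K = 300_000.0  # magnitude of dR/dT
RESISTANCE_OHM = 10_000.0


def resistance_change(delta_t_k, sensitivity=SENSITIVITY_OHM_PER_K):
    """Magnitude of the resistance change for a small temperature step.

    Linear approximation, valid only for steps small compared with T.
    Resistance falls as temperature rises, so the signed change is negative.
    """
    return sensitivity * abs(delta_t_k)


def temperature_resolution(delta_r_ohm, sensitivity=SENSITIVITY_OHM_PER_K):
    """Smallest temperature step resolvable with a given resistance resolution."""
    return delta_r_ohm / sensitivity


# Dimensionless sensitivity |d ln R / d ln T| = |dR/dT| * T / R.
dimensionless = SENSITIVITY_OHM_PER_K * TEMPERATURE_K / RESISTANCE_OHM

print(f"{resistance_change(10e-6):.1f} ohm")          # prints "3.0 ohm"
print(f"{temperature_resolution(1.0) * 1e6:.2f} uK")  # prints "3.33 uK"
print(f"{dimensionless:.2f}")                          # prints "0.15"
```

In other words, a 10 µK change at 5 mK shifts the resistance by about 3 Ω, and resolving 1 Ω corresponds to roughly 3.3 µK.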

Figure 2 Typical sensitivity (Ω/K) over temperature for ULT Rox sensors, showing increased responsiveness at lower temperatures

Figure 3 Typical resistance (Ω) of a ULT Rox™ sensor, which varies from 10,000 Ω to 2,000 Ω over its operating temperature range